When you think of compute, your mind probably goes straight to virtual machines. But there are actually 5 different kinds of compute on Google Cloud.

Today we will discuss what these different kinds of compute are, how to use them, and when you might use each kind of compute.

Abstraction levels

Before we go further, let’s talk a bit about abstraction levels. Abstraction means dealing with ideas rather than concrete details. In the context of today’s article, abstraction means that you focus more on running your application and less on how it is being executed.

The greater the abstraction, the less you have to worry about the environment and underlying infrastructure, and the more you can focus on the code you have written.

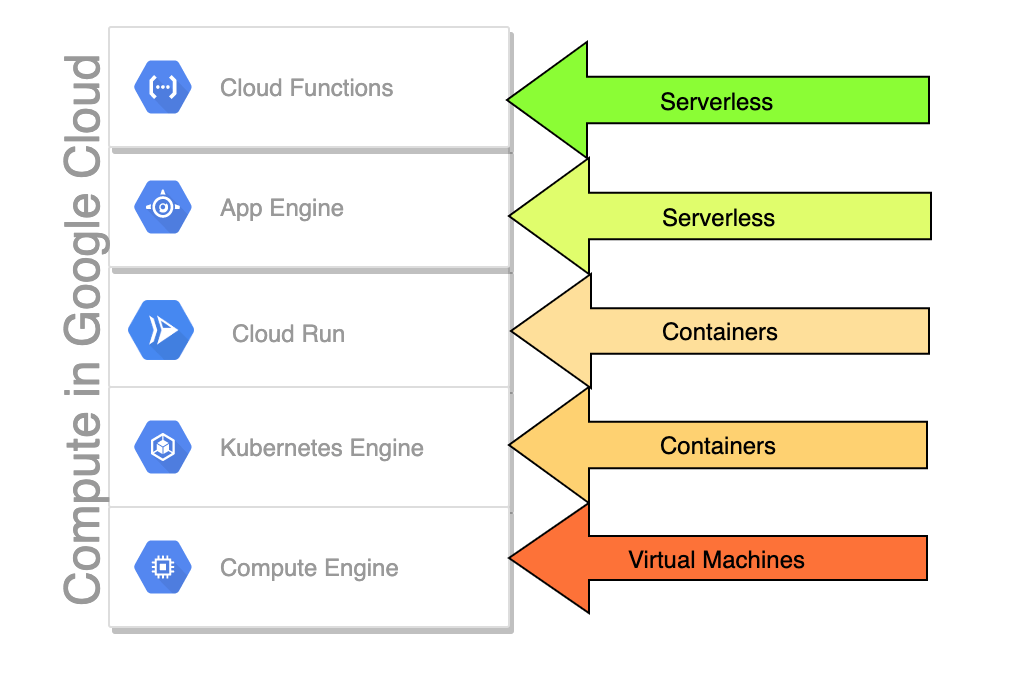

The main difference between the kinds of compute is their level of abstraction. Ordered from the most abstraction to the least, the types of compute are:

- Cloud Functions

- App Engine

- Cloud Run

- Kubernetes

- Compute Engine

As you move from the top of the list toward the bottom, the level of abstraction decreases. If you want the most abstraction, use Cloud Functions. If you want the least abstraction, use Compute Engine.

Billing

In the previous section we talked about how different types of compute give you different levels of abstraction, with Cloud Functions giving you the highest. Each kind of compute also handles billing differently.

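Cloud Functions and App Engine are billed based on usage. For Cloud Functions, each call to the function counts as an invocation, and you pay for the number of invocations plus the compute time they use. App Engine bills for the instance time your app needs to serve its traffic, so your costs follow your traffic.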

Compute Engine and Kubernetes (GKE) are both billed based on resources. You pay for the CPU, memory, and storage you provision for as long as your machines are running, whether or not they are busy.

Cloud Run combines these two billing models. We go into more detail in the Cloud Run section.

To summarize, these are the billing types for each compute type:

- Cloud Functions – usage

- App Engine – usage

- Cloud Run – usage/resources

- Kubernetes – resources

- Compute Engine – resources
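To make the two billing models concrete, here is a minimal sketch in Python. The prices are made-up placeholders, not Google's actual rates, and the function names are hypothetical; the point is only the shape of each formula. Usage-based cost scales with requests, while resource-based cost scales with provisioned resources and running time.

```python
# Hypothetical placeholder prices for illustration only; check the current
# Google Cloud pricing pages for real numbers.
PRICE_PER_MILLION_REQUESTS = 0.40  # usage-based model (hypothetical)
PRICE_PER_VCPU_HOUR = 0.03         # resource-based model (hypothetical)
PRICE_PER_GB_HOUR = 0.004          # resource-based model (hypothetical)
HOURS_PER_MONTH = 730


def usage_based_cost(requests_per_month: int) -> float:
    """Cost grows with the number of requests; zero traffic costs nothing."""
    return requests_per_month / 1_000_000 * PRICE_PER_MILLION_REQUESTS


def resource_based_cost(vcpus: int, memory_gb: float,
                        hours: float = HOURS_PER_MONTH) -> float:
    """Cost grows with provisioned resources and running time, busy or idle."""
    return hours * (vcpus * PRICE_PER_VCPU_HOUR + memory_gb * PRICE_PER_GB_HOUR)
```

With these placeholder rates, an app that receives no traffic costs nothing under the usage-based model, while a 2 vCPU / 8 GB machine left running all month costs the same whether it is busy or idle.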

Compute Engine (Virtual Machines)

Compute Engine gives you virtual machines. This means you get a full operating system with full access to the CPU and memory, and you have the greatest flexibility in what you run on your virtual machine.

Google uses Compute Engine to build many of its own services. For example:

- Cloud SQL

- GKE

- Dataflow

- Dataproc

- Cloud Build

- etc…

Things you should be thinking about

Compute instances are the lowest level of abstraction, so you are responsible for everything related to the machine. This includes, but is not limited to:

- Your software

- Operating system

- CPU, RAM, Disk

- Networking: firewalls, load balancing, etc.

When to use Compute Engine

Compute Engine is the lowest level of abstraction. This means you have the most flexibility, but you also have the most management overhead. For these reasons, you should only use Compute Engine if your application can’t fit into the other compute models.

There are two primary reasons to use Compute Engine:

- Existing application that does not run in a container

- Special hardware/software requirements

Compute Engine machines give you a lot of flexibility in hardware and software. As of the time of this article, you can build machines with up to 160 vCPUs and 12 TB of memory. You can include GPUs, and you can install any operating system you want.

Compute Engine may be a fit if some of the following are true:

- Existing system

- Require a 1:1 mapping between containers and VMs

- Special licensing requirements

- Running databases

- Require network protocols beyond HTTP/s

Compute Engine Constraints

- Scaling speed (Minimum 20 seconds to scale out)

- Have to manage all updates yourself

Compute Engine Benefits

- Good number of pre-built images

- Can run whatever OS you want

- Can run whatever applications you want

- Can use whatever protocols you want

- Reads are highly parallelized, so read performance is really good even with non-SSD storage

- You can run Docker containers directly on Container-Optimized OS if you prefer not to use GKE (Google Kubernetes Engine)

- Hardware highly customizable

- Can resize disks without rebooting instance

- Can change all other hardware characteristics with a reboot

Managed instance groups

Managed instance groups are a way to simplify managing your compute instances. They are similar to Auto Scaling groups on AWS. Some features of managed instance groups:

- Template/image based

- autoscaling

- regional groups (Multi-Zone)

- Rolling updates

- Load balanced

Managed instance groups let you create identical instances from an instance template, put the group behind a load balancer, and autoscale the number of instances across multiple zones (data centers) within a region as the load changes.
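The autoscaling idea can be illustrated with a simplified proportional rule: resize the group so that average CPU utilization moves back toward a target. This is the same rule the Kubernetes Horizontal Pod Autoscaler documents, used here as an assumption; Compute Engine's real autoscaler adds more logic (stabilization, initialization periods, and so on), so treat this sketch and its hypothetical function name as an illustration only.

```python
import math


def recommended_size(current_size: int, observed_utilization: float,
                     target_utilization: float, min_size: int, max_size: int) -> int:
    """Simplified proportional autoscaling for a group of identical instances."""
    if target_utilization <= 0:
        raise ValueError("target utilization must be positive")
    if current_size == 0:
        return min_size
    # Scale the group so that average utilization moves back toward the target.
    desired = math.ceil(current_size * observed_utilization / target_utilization)
    # Never go outside the group's configured minimum and maximum size.
    return max(min_size, min(max_size, desired))
```

For example, `recommended_size(4, 0.75, 0.5, min_size=2, max_size=10)` returns 6: four instances running at 75% against a 50% target need about 50% more capacity.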

Kinds of machines

If you need to use Compute Engine to run virtual machines, it is good to know about the two different machine types:

- standard

- pre-emptible

Standard machines have Google’s standard SLA. As of the time of this article, that is 99.95% uptime.

Pre-emptible machines do not come with the same SLA. Instead, they are always stopped after at most 24 hours of running, and they can be stopped at any time with little notice from Google (about 30 seconds).

In exchange for these caveats, you get up to an 80% discount when compared to standard virtual machines.

As you plan your infrastructure, if you find that you need Compute Engine machines and your app is written so that it can survive its machines being stopped and restarted, pre-emptible machines will give you a good bit of cost savings.
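One common way to survive restarts is checkpointing: save progress periodically and resume from the last checkpoint when the machine comes back. In this minimal sketch a local JSON file stands in for durable storage; in practice you would assume something that outlives the VM, such as a Cloud Storage bucket or a persistent disk. The function name and checkpoint format are hypothetical.

```python
import json
import os
from typing import Callable, List


def process_with_checkpoints(items: List[str], handle: Callable[[str], None],
                             checkpoint_path: str, every: int = 10) -> int:
    """Process items in order, resuming after the last saved checkpoint.

    Returns how many items were processed in this run.
    """
    start = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            start = json.load(f)["next_index"]
    for i in range(start, len(items)):
        handle(items[i])
        # Save progress every `every` items (and at the end), so a preemption
        # loses at most `every` items of work.
        if (i + 1) % every == 0 or i + 1 == len(items):
            tmp_path = checkpoint_path + ".tmp"
            with open(tmp_path, "w") as f:
                json.dump({"next_index": i + 1}, f)
            os.replace(tmp_path, checkpoint_path)  # swap in the new checkpoint in one step
    return len(items) - start
```

Items processed after the last checkpoint are redone after a restart, so `handle` should be safe to run twice on the same item.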

Discounts

If pre-emptible machines don’t make sense for you, Google offers two other kinds of discounts on Compute Engine:

- Committed use discounts

- Sustained use discounts

Sustained use discounts kick in automatically and give you up to 30% off the cost of your compute instances.

Committed use discounts require some effort on your part: you need to purchase a commitment up front. As of the time of this article, you can commit to either 1 year or 3 years of compute usage, and in exchange you can get more than 50% off the list price.

Committed use discounts are at the project level, not the billing account level. So be sure to think things through before making your commitments.
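A quick way to compare these options is to apply each discount to the same list price. In this sketch the $100 monthly list price is hypothetical, and 55% is an assumed value standing in for "more than 50%". It compares each option on its own rather than combining them.

```python
def discounted_cost(list_price: float, discount: float) -> float:
    """Apply a fractional discount (0.30 means 30% off) to a list price."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be between 0 and 1")
    return list_price * (1.0 - discount)


LIST_PRICE = 100.0  # hypothetical monthly list price for one VM, in dollars

# Discount levels quoted in this article; 55% is an assumed value for "more than 50%".
OPTIONS = {
    "standard (no discount)": 0.0,
    "sustained use (up to 30%)": 0.30,
    "committed use (assumed 55%)": 0.55,
    "pre-emptible (up to 80%)": 0.80,
}

for name, discount in OPTIONS.items():
    print(f"{name}: ${discounted_cost(LIST_PRICE, discount):.2f}")
```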

Containers

Containers are a newer technology that lets developers package an application together with its dependencies, so it is ready to run without installing anything else.

This technology allows the developer to run the container on their workstation, then run the application in production with high-confidence that it will work as expected.

Kubernetes is a popular container orchestration system, originally built by Google, that helps development and operations teams run containers at scale.

Kubernetes (GKE)

GKE is Google’s managed Kubernetes service. With a few clicks, Google will spin up a Kubernetes master for you, then provision one or more Compute Engine instances with the Kubernetes agent installed.

Once your Kubernetes cluster is live, you can start running your containers on it.

Kubernetes Architecture

There are three main components in your Kubernetes cluster:

- Node

- Pod

- Container

A node is a machine (on GKE, a Compute Engine instance) with an operating system that runs the Kubernetes agent and a container runtime such as Docker.

A pod is a logical unit with its own IP address that runs one or more containers.

Finally, a container is your packaged, sandboxed application.

A node typically runs several pods, and every pod contains at least one container and can contain several. However, container names must be unique within a pod, so a pod never runs two copies of the same container; to run more copies, you run more pods. You can use pods to group complementary containers for a given application.

The master node in the Kubernetes cluster is in charge of scheduling pods onto the various nodes. All the containers in a pod run on the same node.

As you deploy containers on your Kubernetes cluster, you create services. A service load balances traffic across the pods running that application. When you want to access the application, you access it through the service IP.
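The relationships between nodes, pods, containers, and services can be sketched as a toy data model in plain Python. This is not the Kubernetes API, and all class names are hypothetical. The service picks pods by label and round-robins across their IPs for simplicity; real Kubernetes Services spread traffic differently depending on the proxy mode.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Dict, List


@dataclass
class Container:
    name: str
    image: str


@dataclass
class Pod:
    ip: str
    labels: Dict[str, str]
    containers: List[Container]

    def __post_init__(self) -> None:
        if not self.containers:
            raise ValueError("a pod needs at least one container")
        names = [c.name for c in self.containers]
        if len(names) != len(set(names)):
            raise ValueError("container names must be unique within a pod")


@dataclass
class Node:
    name: str
    pods: List[Pod] = field(default_factory=list)


class Service:
    """Toy service: selects pods by label and round-robins across their IPs."""

    def __init__(self, selector: Dict[str, str], nodes: List[Node]) -> None:
        self.backends = [
            pod.ip
            for node in nodes
            for pod in node.pods
            if all(pod.labels.get(k) == v for k, v in selector.items())
        ]
        if not self.backends:
            raise ValueError("no pods match the selector")
        self._next = cycle(self.backends)

    def route(self) -> str:
        """Return the pod IP that should receive the next request."""
        return next(self._next)
```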

Types of GKE Kubernetes Clusters

There are two types of Kubernetes clusters on GKE:

- Zonal

- Regional

Regional clusters spread nodes across multiple zones (data centers) within the region, with the same number of nodes in each zone; by default they use three zones. This gives you a good amount of regional resiliency, because your nodes are not all in one zone.

A zonal cluster runs in a single zone (data center) that you choose. Its resiliency is not as good: if that zone has an outage, the whole cluster is affected.
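The resiliency difference comes down to simple arithmetic on where the nodes live. For a regional GKE cluster, the node count you set applies to each zone, so one node per zone across three zones gives three nodes in total. The zone names in this sketch are hypothetical, and both clusters have the same total of three nodes.

```python
from typing import Dict


def surviving_nodes(nodes_per_zone: Dict[str, int], failed_zone: str) -> int:
    """Count the nodes still running after a single zone goes down."""
    return sum(count for zone, count in nodes_per_zone.items() if zone != failed_zone)


# Hypothetical zone names; both clusters have three nodes in total.
regional = {"zone-a": 1, "zone-b": 1, "zone-c": 1}
zonal = {"zone-a": 3}

print(surviving_nodes(regional, "zone-a"))  # 2
print(surviving_nodes(zonal, "zone-a"))     # 0
```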

Benefits of GKE

There are many benefits to running Google Kubernetes Engine compared with other ways of running containers. These benefits include but are not limited to:

- cluster autoscaling

- Google runs the master node for you and manages updates

- Can have a cluster up and running in around 5 minutes

- enables high utilization of nodes

- Good compromise in the different levels of abstraction

What to think about

When running Google Kubernetes Engine, you focus on the logical infrastructure and applications, not on the computers or the containers themselves, because Kubernetes takes care of those for you.

You want to think about what programs you are running, how those programs are connected, and where those programs store their state.

Workloads on Kubernetes are best thought of as stateless. If your application is not stateless, you will need to use Kubernetes features such as persistent volumes and StatefulSets to support it.

Kubernetes is configured using YAML files. You will need to think about how you will express the configuration of your infrastructure in this declarative language.

To summarize, you will need to think about:

- Logical infrastructure: Applications, not computers or containers

- What programs? How are they connected? Where do they store state?

- Configuration using YAML files
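To give a feel for that declarative configuration, here is a sketch that builds a Deployment and a Service as Python dictionaries and prints them as JSON. JSON is valid YAML, and `kubectl apply -f` accepts JSON files as well as YAML, so each printed object could be saved to its own file and applied. The app name and image path are hypothetical placeholders.

```python
import json


def deployment_and_service(name: str, image: str, replicas: int, port: int) -> list:
    """Return a Deployment and a matching Service for one containerized app."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The Deployment manages pods whose labels match this selector.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        # The Service load balances across every pod carrying the same labels.
        "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": port}]},
    }
    return [deployment, service]


if __name__ == "__main__":
    # Hypothetical app name and image path.
    for manifest in deployment_and_service("hello-web", "gcr.io/my-project/hello-web:1.0", 3, 8080):
        print(json.dumps(manifest, indent=2))
```

Deploying an additional application is then a matter of adding another pair of objects like these.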

Good fit for

- Need to run in multiple environments (dev machine, multi-cloud, etc.)

- Take full advantage of containers

- Have or want CI/CD pipeline

- Need network protocols beyond HTTP/S

Constraints

- Must use Containers

- Licensing (Some things are licensed per instance or CPU)

- Some architecture constraints

Cloud Run

Cloud Run is a fairly new compute type on Google Cloud. You can think of Cloud Run as a combination of Kubernetes and Cloud Functions. To run an application on Kubernetes, you first create your container, then create a Kubernetes service for it, and then deploy your container onto the cluster to run behind that service.

Cloud Functions, on the other hand, are much easier. You create your function, add your code, and set up a trigger to run it (for example, an HTTP URL), and your function is ready to use.

The problem with Cloud Functions is that you are limited in the languages and frameworks you can use.

You do not have the same limitations when running containers on Kubernetes. But, as we just said, there is more work to set up your application on Kubernetes than to write it as a Cloud Function.

Cloud Run lets you take a container and deploy it as easily as you would deploy a Cloud Function. Once your container is built and pushed to a container registry, it takes just a few clicks to deploy it as a Cloud Run application. When the deployment finishes, you are given a URL for accessing the application.
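The main thing the container has to do is serve HTTP on the port Cloud Run gives it: Cloud Run sets the `PORT` environment variable (8080 by default) and expects the container to listen on that port. Here is a minimal, standard-library-only sketch of such a server; the handler name and response text are hypothetical, and the Dockerfile that would package it is left out.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def resolve_port(default: int = 8080) -> int:
    """Read the port Cloud Run assigns through the PORT environment variable."""
    return int(os.environ.get("PORT", default))


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        body = b"Hello from a container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def main() -> None:
    # Listen on all interfaces, not just localhost, so traffic routed into the
    # container can reach the server. The app keeps no state between requests.
    server = HTTPServer(("0.0.0.0", resolve_port()), HelloHandler)
    server.serve_forever()
```

The container image's entrypoint would simply call `main()`.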

Benefits of Cloud Run

As we just said, Cloud Run is like a Cloud Function backed by containers. As you might imagine, there are a lot of benefits to using this compute type. These benefits include:

- Serverless agility for anything you can put in a container

- Get a URL for any container image

- Autoscaling all the way down to 0 instances

- SSL termination

- Pay only for what you use, with 100ms granularity

- One instance can handle multiple requests at the same time, so concurrent requests share the instance time you pay for

- Does not have the same language/framework limitations as Cloud Functions and App Engine
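The last two benefits interact: with 100ms granularity and concurrent requests on one instance, overlapping requests share billed time. This sketch uses a simplified model, assuming an instance is billed only while it handles at least one request and each busy period is rounded up to 100ms; it ignores per-request fees, CPU and memory rates, and idle instances, and the function names are hypothetical. Times are in milliseconds.

```python
from typing import List, Optional, Tuple

GRANULARITY_MS = 100  # billing granularity described in the list above


def round_up(ms: int) -> int:
    """Round a non-negative duration up to the next billing increment."""
    return (ms + GRANULARITY_MS - 1) // GRANULARITY_MS * GRANULARITY_MS


def billed_ms_one_per_instance(requests: List[Tuple[int, int]]) -> int:
    """Every request runs on its own instance, so each one is billed separately."""
    return sum(round_up(end - start) for start, end in requests)


def billed_ms_shared_instance(requests: List[Tuple[int, int]]) -> int:
    """Requests share one instance; time where they overlap is billed only once."""
    total = 0
    busy_start: Optional[int] = None
    busy_end: Optional[int] = None
    for start, end in sorted(requests):
        if busy_end is None or start > busy_end:
            # A new busy period begins; close out the previous one.
            if busy_start is not None and busy_end is not None:
                total += round_up(busy_end - busy_start)
            busy_start, busy_end = start, end
        else:
            # Overlapping request: extend the current busy period.
            busy_end = max(busy_end, end)
    if busy_start is not None and busy_end is not None:
        total += round_up(busy_end - busy_start)
    return total
```

In this model, three overlapping requests spanning 0 to 300ms cost one 300ms busy period on a shared instance, instead of 700ms when each request gets its own instance.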

Fully Managed vs Kubernetes

There are two ways to run your containers on Cloud Run. The first is the regular way I just described; think of this as the hosted, or fully managed, version.

Or, you can run your containers on your existing Kubernetes cluster.

If your developers have an application they just want to try out, they should use the standard, fully managed way of running Cloud Run containers. However, if the application has special requirements, you might want to consider running it on your Kubernetes cluster.

Running your containers on your Kubernetes cluster has several benefits. The first one is networking. As of the time of this article, fully managed Cloud Run containers can only communicate over the public internet. You can set up rules to restrict who can access the application, but it is still only accessible through the internet.

By running your containers on your Kubernetes cluster, your application can use your private IP address space. This lets your Cloud Run application be reached by applications that cannot go over the internet, and it lets your Cloud Run application reach applications that are not available on the internet.

If your Kubernetes nodes have special hardware, such as GPUs, your Cloud Run application can use it. As of the time of this article, this is not available in fully managed Cloud Run.

It is very simple to move your application from the fully managed deployment model to your Kubernetes cluster, or back again. All you have to do is redeploy the Cloud Run application and select a different option.

Limitations of Cloud Run

As we’ve discussed, there are many benefits to using Cloud Run. The main drawbacks are:

- Have to run in a container

- Only supports HTTP/S requests

- No Persistent storage within container

- Application has to be stateless

Cloud Functions

Cloud Functions are similar to Lambda on AWS. They are event-driven and run a piece of code each time they are invoked. There are many ways to trigger Cloud Functions, including but not limited to:

- Pub/Sub

- Cloud Storage

- HTTP requests
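Here is a sketch of what two of these triggers look like, modeled on the first-generation Python runtime: an HTTP function receives a Flask `request` object, and a Pub/Sub-triggered background function receives `(event, context)` with the message body base64-encoded in `event['data']`. The function names and greeting are hypothetical; they would be deployed with `gcloud functions deploy` using `--trigger-http` or `--trigger-topic`.

```python
import base64


def hello_http(request):
    """HTTP trigger: `request` is a Flask request object supplied by the runtime."""
    body = request.get_json(silent=True)
    if not isinstance(body, dict):
        body = {}
    name = body.get("name") or request.args.get("name") or "World"
    return f"Hello, {name}!"


def hello_pubsub(event, context):
    """Pub/Sub trigger: the message payload arrives base64-encoded in event['data']."""
    message = base64.b64decode(event["data"]).decode("utf-8") if "data" in event else ""
    print(f"Received Pub/Sub message: {message!r}")
    return message  # the runtime ignores this; returned here only to ease testing
```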

Cloud Functions are my favorite kind of compute, and I prefer to use them anywhere I can. I think of Cloud Functions as truly serverless. With all of the other types of compute, there are concessions you have to make related to management.

With Cloud Functions, if you are using a supported language and framework, there are few concessions to be made.

With Cloud Functions, you pay for each invocation of the function plus the compute time it uses while running, measured in small increments. You do not pay for idle servers, which makes them very cost-effective for many tasks. In addition, Google does not charge for Cloud Functions usage within a generous free tier (as of the time of this article, the first 2 million invocations each month), so many environments can use Cloud Functions at no charge.

If you’re writing a truly cloud-native application, you use Cloud Functions much like you would use functions in a traditional application: your first function calls your second function, then takes what is returned and passes it to a third function. Because each function scales independently, this makes your application highly scalable.

Cloud Functions can read and write to storage buckets, read and write to message queues, make and receive HTTP requests, and read and write to databases, all within whatever security context you define.

Unfortunately, it is not easy to port your existing applications to run on Cloud Functions. Rather, you need to rewrite your applications to take advantage of them.

Limitations

- Limited in the languages it can run in

- must interact via events

- Can’t Lift & Shift applications easily

Benefits

- good for serverless, don’t worry about runtime environment

- good for data transformations (ETL) or transactional things

- can be used to glue things together via HTTP

- Pay only for what you use

- Autoscales

- Integrates with Stackdriver Logging

Cost

- You don’t have to think about the infrastructure at all

- You only pay for what you use

Google App Engine

Google App Engine has been around longer than Google Cloud itself. It is a service for deploying serverless applications with minimal effort.

In terms of abstraction, it falls between Cloud Run and Cloud Functions.

The benefits include autoscaling, application versioning, traffic splitting between different versions of the application, and not having to manage servers.
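App Engine performs traffic splitting for you (it can split by IP address, by cookie, or randomly), so you never write this yourself. Purely to illustrate the idea of a sticky, weighted split, this sketch hashes a user ID to a stable point and maps it to a version in proportion to the weights; it is not App Engine's implementation, and the version names are hypothetical.

```python
import hashlib
from typing import Dict


def pick_version(user_id: str, weights: Dict[str, float]) -> str:
    """Deterministically map a user to a version in proportion to the weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Hash the user ID to a stable point in [0, 1) so a user keeps hitting the same version.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    point = int.from_bytes(digest[:8], "big") / 2**64
    cumulative = 0.0
    for version, weight in sorted(weights.items()):
        cumulative += weight / total
        if point < cumulative:
            return version
    return sorted(weights)[-1]  # guard against floating-point rounding at the top end
```

With weights of `{"v1": 0.9, "v2": 0.1}`, roughly 10% of users would land on `v2`, and each user would see the same version on every request.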

When deploying your App Engine application, you start by selecting the runtime you want to use, for example Node.js or Python.

After you’ve selected your runtime, you upload your application code, deploy it, and your application is live. In terms of effort, it is pretty close to Cloud Functions.

It has the benefit that you don’t have to build a container to deploy your application, and it supports more languages and frameworks than Cloud Functions.

There are three versions of App Engine. There is standard, which you can think of as the legacy version; it has been around the longest. Next there is the second generation standard, and finally there is flexible.

Both flavors of standard run within containers. After you deploy your application, it dynamically builds the containers using the runtime you specified and then deploys your auto-scaling application.

If you choose flexible, your applications are instead run on full virtual machines. This gives you additional flexibility in how your application runs. But it will drive up your costs. In addition, virtual machines don’t scale as fast as containers.

So it also affects how quickly your application can scale up and down, which can further increase cost and possibly reduce application responsiveness and reliability.

App Engine is not as well suited for extremely low-traffic applications as other compute types like Cloud Functions or Cloud Run.

This is because App Engine keeps a configured minimum number of instances running, whether those are virtual machines or containers. In the flexible environment that minimum is at least one, so it never scales down to nothing.

App Engine is optimized for web applications.

Benefits

- Autoscaling

- Versioning

- Traffic Splitting

- No servers to manage, patch, etc..

3 different execution environments

- Standard

- 2nd generation standard

- Flexible

It is recommended to run in the 2nd generation standard environment unless you need specific VM sizes or something other than HTTP (use Flexible) or you have legacy code (use Standard).

What to think about

- Code

- HTTP Requests

- Versions

Good fit

- HTTP

- Stateless

- scaling

Constraints

- Limited languages (PHP, Go, Node, Python, Java)

- Flexible environment: Docker constraints

- Not best for very low traffic

Why choose app engine?

- Developers focus on code

- optimized for web workloads

- great for variable workload

Move applications between compute types

Depending on the application you are trying to move and which compute types you are moving between, migrating can be easy or difficult.

As a general rule, it is easier to move from a more abstracted layer of compute to a less abstract layer of compute.

Meaning it is much easier to move an application from a cloud function to a compute instance than it is to move from a compute instance to a cloud function.
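The rule of thumb can be written down directly using the abstraction ordering from the start of this article. This is only a sketch of the heuristic, with a hypothetical function name; the paragraphs that follow explain why real migrations do not always follow it.

```python
# Ordered from most to least abstract, as listed at the start of this article.
ABSTRACTION_ORDER = [
    "Cloud Functions",
    "App Engine",
    "Cloud Run",
    "Kubernetes",
    "Compute Engine",
]


def migration_direction(source: str, target: str) -> str:
    """Rule of thumb: moving toward less abstraction is usually the easier direction."""
    src = ABSTRACTION_ORDER.index(source)  # raises ValueError for unknown names
    dst = ABSTRACTION_ORDER.index(target)
    if src == dst:
        return "same compute type"
    return "usually easier" if dst > src else "usually harder"
```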

This is not a hard-and-fast rule. It depends more on what you might be running in Compute Engine and why you chose to run it in Compute Engine in the first place.

As we covered in the previous sections, Cloud Functions, App Engine, etc. are limited in the languages and frameworks they support. If you happen to have a Python or JavaScript application running in a compute instance, you may well be able to migrate it to a Cloud Function.

However, if you have an application written in C++ running in a Compute Engine instance, you will have a difficult time migrating it to a Cloud Function or an App Engine standard application. In fact, you cannot migrate it, because C++ is not a supported language.

Depending on the nature of the application you very well may be able to move it to run as a containerized application.

There are not a lot of limitations in the applications you can run in containers. As long as the application can be run through a command-line interface on Windows or on Linux, you can run it in a container. It is a best practice for your application to be stateless when running in a container.

However, this is not a hard-and-fast rule. For example, you can run MySQL in a container, but MySQL is stateful: it stores data on disk and keeps client sessions. Running applications like this in a container can be problematic, and you have to plan accordingly.

If you want to migrate an application from Compute Engine to Kubernetes or Cloud Run, you will need to create the container and push it to a container registry. Once your application is in the container registry, you can easily deploy it to run on Kubernetes or Cloud Run.

If you have an application running on Kubernetes, it is very easy to move it to Cloud Run and vice versa. As long as your container is saved to Google Container Registry, it is just a matter of a few clicks in the console or modifying a couple of YAML files to retarget your application to a different kind of compute.

If you want to migrate your application from Compute Engine, or from a containerized application, to App Engine or Cloud Functions, you will need to check whether the language and framework you used to create your application are supported on Cloud Functions or App Engine.
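That check, together with the container and HTTP constraints covered earlier, can be sketched as a rough first-pass filter. The language sets below are placeholder assumptions for illustration only; supported runtimes change over time, so check the current Cloud Functions and App Engine documentation. The function name is hypothetical.

```python
from typing import Dict, List, Set

# Placeholder runtime lists, assumed for illustration only; check the current docs.
SUPPORTED_LANGUAGES: Dict[str, Set[str]] = {
    "Cloud Functions": {"python", "nodejs", "go"},
    "App Engine": {"python", "nodejs", "go", "java", "php"},
}


def migration_targets(language: str, can_containerize: bool, http_only: bool) -> List[str]:
    """List compute types an existing app could plausibly move to (rough first pass)."""
    targets = [name for name, langs in SUPPORTED_LANGUAGES.items()
               if language.lower() in langs]
    if can_containerize:
        targets.append("Kubernetes")
        if http_only:
            # Cloud Run only serves HTTP/S requests.
            targets.append("Cloud Run")
    targets.append("Compute Engine")  # the fallback that can run almost anything
    return targets
```

A C++ service that can be containerized and only speaks HTTP would get Kubernetes, Cloud Run, and Compute Engine as candidates, but not Cloud Functions or App Engine.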

Summary

We discussed the five primary types of compute on Google Cloud. Each type of compute targets a different level of abstraction and has its own benefits and drawbacks.

The most flexible type of compute is Compute Engine, which gives you virtual machines. On virtual machines, you have total control over what runs on them. You can choose a custom operating system or use out-of-the-box machine images.

Cloud Functions offer the highest level of abstraction. They give you the least flexibility, but they are also often the lowest cost and require the least management overhead.

In my opinion, Google Kubernetes Engine gives you the best mix of flexibility versus manageability. You get to customize the individual nodes that run your containers, while Kubernetes handles provisioning, deprovisioning, scaling, etc. for you.

Once your Kubernetes cluster is configured and running, there is very little management overhead, and it is easy to deploy additional applications. All that is required is a few extra lines in a YAML file.

If you want to use containers but Kubernetes is too complicated, then Cloud Run gives you a very simple way to run your containers. And if you need something slightly more flexible than Cloud Functions, App Engine is a nice compromise.

There is no right or wrong place to run your applications. Rather you have to weigh the benefits and drawbacks of each compute type and choose what works best for your environment and your application.